C21U researchers are excited about the potential of large language models (LLMs) to transform education by offering personalized learning experiences to students at scale. LLMs offer a way to analyze individual learners' processes and outcomes and to build predictive models that identify potential areas for improvement and enhance learner engagement and success.

Quick overview of LLMs and their impact on educational data

A large language model (LLM) is a machine learning model trained on very large text corpora that can generate sophisticated answers to many questions. These models, such as OpenAI's GPT series and Google's BERT, are not merely sophisticated text generators. They acquire a nuanced understanding of context, enabling them to generate coherent and contextually relevant text across a wide range of domains. LLMs are “prompted” to accomplish a task and produce their best response based on the prompt. Their utility spans many fields, including translation, summarization, sentiment analysis, and even creative tasks like storytelling or writing lyrics. People use LLMs for two main reasons: to curate information on a topic quickly and to generate original creative content, primarily writing but also other forms of artistic expression.

Researchers at the Center for 21st Century Universities (C21U) are excited about the potential of LLMs to transform education by providing personalized learning experiences to students at scale. As the demand for enhanced online education grows, LLMs have become powerful tools for gaining insights from the wealth of data learners generate. These models offer a unique means to analyze and understand individual learners’ learning processes and outcomes. Furthermore, LLMs enable the construction of predictive models that can help identify potential areas for improvement or inform the design of targeted interventions to enhance learner engagement and success.

Overview of C21U’s LLM Experiment

It has become crucial for higher education institutions to identify and develop students’ 21st-century skills, especially leadership, so that students can adapt to the modern workforce and academia. In particular, letters of recommendation (LORs) provide valuable third-party insights into applicants' backgrounds and leadership experiences. However, manually reviewing LORs to assess competencies requires considerable time and effort from admissions committees. To tackle this challenge, we aim to develop an AI-powered tool that analyzes LORs submitted to an online master's program and detects evidence of leadership skills. This tool will streamline the document review process, saving time and effort for the admission committee while offering constructive feedback for the professional development of the admitted applicants.

We initially used traditional classifiers such as the Robustly Optimized BERT Pretraining Approach (RoBERTa) model; in our ongoing research, the best F1 score so far for identifying leadership skills is 71%. We then explored how to leverage the strengths of LLMs to extract relevant information about the applicants’ leadership attributes efficiently. LLMs can capture nuanced linguistic relationships, making them well-suited for extracting meaningful phrases from diverse text sources. We therefore aim to test whether an LLM lets us drill down into deeper details beyond the initial classifications and substantially reduce the data processing we would otherwise have needed for phrase extraction and summarization.
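For readers unfamiliar with the metric, the F1 score is the harmonic mean of precision and recall. The sketch below shows the standard calculation; the function name and the counts passed to it are illustrative assumptions, not figures from our experiments.

```python
def f1_score(true_positives: int, false_positives: int, false_negatives: int) -> float:
    """Harmonic mean of precision and recall; returns 0.0 when either is undefined."""
    predicted = true_positives + false_positives  # everything the classifier flagged
    actual = true_positives + false_negatives     # everything it should have flagged
    if predicted == 0 or actual == 0:
        return 0.0
    precision = true_positives / predicted
    recall = true_positives / actual
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical counts for illustration only, not results from the study.
score = f1_score(true_positives=8, false_positives=2, false_negatives=4)
print(f"{score:.2f}")
```

Because the harmonic mean is pulled toward the lower of the two values, a classifier cannot reach a high F1 score by flagging everything (high recall, low precision) or by flagging almost nothing (high precision, low recall).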

Our Approach to Prompt Engineering

Because an LLM is trained on an extremely large dataset, it is widely believed to rely heavily on retrieving relevant information from its training data rather than reasoning about the task at hand. However, the current literature suggests that prompt engineering can get an LLM to demonstrate and apply reasoning skills. Prompt engineering for LLMs involves crafting specific input instructions to guide the model toward desired behaviors, such as reasoning or problem-solving. This process includes formulating prompts, analyzing their effectiveness, optimizing them iteratively, and evaluating their performance.

Our preliminary findings indicate that simply trusting an LLM to extract the correct phrases is not the best way to tackle this problem, because it extracts many irrelevant phrases. We therefore use Reasoning and Acting (ReAct) in this project, a general paradigm that combines reasoning and acting with LLMs. Across multiple experiments, we found that ReAct lets us see how the LLM is “thinking” and breaking down the problem to arrive at its final output. Using this information, we can debug and improve our prompts.

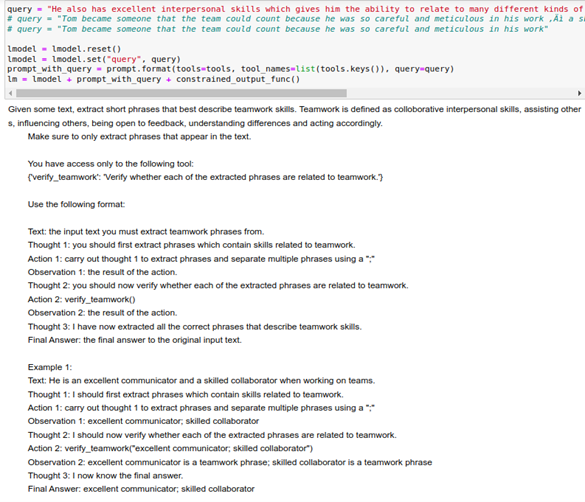

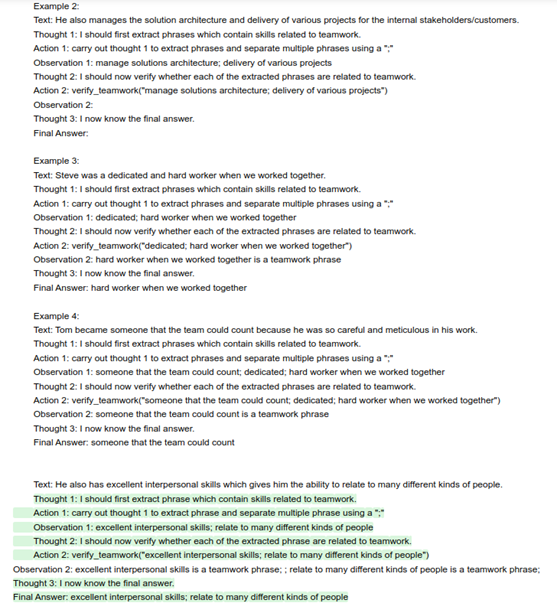

ReAct prompts LLMs to generate verbal reasoning traces and actions for a task. This allows the system to perform dynamic reasoning to create, maintain, and adjust plans for acting while enabling interaction with external environments (e.g., Wikipedia) to incorporate additional information into the reasoning. We first prompt Llama 2, a family of pre-trained and fine-tuned LLMs, to extract potential teamwork phrases from the text. Next, we use a different prompt to evaluate the potential teamwork phrases extracted in the previous step. We further incorporate a second LLM to validate the phrases extracted by the first LLM. While the first LLM extracts both correct and incorrect phrases, the second LLM helps filter out the incorrect ones.
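The extract-then-verify flow described above can be sketched roughly as follows. This is a minimal illustration rather than our actual implementation: each LLM call is modeled as a plain Python function, and `fake_extractor`, `fake_verifier`, and the sample letter are hypothetical stand-ins so that the example runs without any model access.

```python
from typing import Callable, List

# Each "LLM" is modeled as a plain function so the sketch runs without a model.
Extractor = Callable[[str], List[str]]
Verifier = Callable[[str], bool]


def extract_then_verify(text: str, extract: Extractor, verify: Verifier) -> List[str]:
    """Let the first model propose phrases, then keep only those the second accepts."""
    candidates = extract(text)
    return [phrase for phrase in candidates if verify(phrase)]


# --- Hypothetical stand-ins for the two LLM prompts (not real model output) ---
def fake_extractor(text: str) -> List[str]:
    # Over-generates on purpose: returns every comma-separated clause.
    return [clause.strip() for clause in text.split(",") if clause.strip()]


def fake_verifier(phrase: str) -> bool:
    # A real verifier would be a second LLM prompt; here it is a keyword check.
    keywords = ("team", "collaborat", "together")
    return any(keyword in phrase.lower() for keyword in keywords)


letter = "She collaborated with five engineers, enjoys hiking, led the team through a deadline"
verified = extract_then_verify(letter, fake_extractor, fake_verifier)
print(verified)
```

Keeping the extractor and verifier as separate, swappable functions mirrors the design choice in the text: the first step is allowed to over-generate, and the second step is responsible for precision.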

To illustrate the prompting process, the accompanying output screenshot shows how we prompted the LLM to learn from the given example phrases and extract teamwork phrases. Action_2 calls "verify_teamwork," a function that uses another LLM prompt to validate the extracted phrases. The text highlighted in green is the text that the LLM generated in response to the prompt.
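To make the role of an action such as Action_2 more concrete, the sketch below shows one way a ReAct-style trace could be scanned for action lines and routed to a function. The trace format (`Action_<n>: tool[argument]`), the `TOOLS` registry, the `run_actions` helper, and the keyword-based body of `verify_teamwork` are all assumptions made for illustration; in our setup, the verification step is a second LLM prompt.

```python
import re
from typing import Callable, Dict, List, Tuple

# Assumed trace format: "Action_<n>: <tool_name>[<argument>]".
ACTION_PATTERN = re.compile(r"^Action_(\d+):\s*(\w+)\[(.*)\]\s*$")


def verify_teamwork(phrase: str) -> str:
    # Placeholder for the second LLM prompt; a keyword check keeps this runnable.
    return "valid" if "team" in phrase.lower() else "invalid"


# Hypothetical registry mapping tool names in the trace to Python functions.
TOOLS: Dict[str, Callable[[str], str]] = {"verify_teamwork": verify_teamwork}


def run_actions(trace: str) -> List[Tuple[int, str, str]]:
    """Find Action lines in a trace, call the named tool, and collect the results."""
    observations = []
    for line in trace.splitlines():
        match = ACTION_PATTERN.match(line.strip())
        if not match:
            continue  # Thought lines and any other text are ignored.
        step = int(match.group(1))
        tool_name = match.group(2)
        argument = match.group(3)
        tool = TOOLS.get(tool_name)
        result = tool(argument) if tool else f"unknown tool: {tool_name}"
        observations.append((step, tool_name, result))
    return observations


# Invented trace for illustration; not actual model output.
example_trace = """Thought_2: I should check whether this phrase shows teamwork.
Action_2: verify_teamwork[worked closely with her team]
Thought_3: I should check the next candidate too.
Action_3: verify_teamwork[enjoys hiking]"""

results = run_actions(example_trace)
for step, tool_name, result in results:
    print(step, tool_name, result)
```

In a full ReAct loop, each result would be fed back to the model as an observation before it produces its next thought; this sketch only covers the dispatch step.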

Lessons Learned

In conclusion, we experimented with multiple techniques to obtain consistent output from the LLM. Guidance proved instrumental here, offering a more efficient approach than conventional prompting or chaining methods. Additionally, ReAct prompting helped us craft effective prompts because it gave us insight into the LLM’s thought process while it worked on the task. Lastly, using a separate LLM prompt to verify the phrases extracted by another LLM enhanced the reliability of our results. Moving forward, we are poised to extend the application of the LLM to diverse learning data sets, including its potential to detect students’ cognitive presence and critical thinking within online discussion forums.