
While self-regulated learning (SRL) is essential to student success, its recursive and social dimensions are difficult to capture with traditional models. This paper develops and analyzes an agent-based simulation model that integrates the SRL framework with task complexity and the Community of Inquiry (CoI) constructs of teaching presence (instructor feedback) and social presence (peer feedback) to better understand learner performance.

I. INTRODUCTION

Self-regulation refers to a process involving “self-generated thoughts, feelings, and behaviors that are oriented to attaining goals” ([1], p. 65). In learning contexts, self-regulated learners monitor and adjust cognitive, motivational, and behavioral strategies to achieve success. This process is especially critical in online and MOOC environments, where instructor presence is limited and learners must exercise greater autonomy. Prior research highlights the importance of fostering metacognitive awareness, strategic planning, and adaptive control to promote engagement and course completion in digital settings [2]. Yet learners often struggle to accurately self-monitor and sustain motivation, particularly when feedback and scaffolding are minimal. This underscores the need for external systems that provide responsive, personalized support for SRL.

Digital twins (DTs) are virtual representations of learners that mirror cognitive, behavioral, and affective states and offer new possibilities for modeling and supporting SRL. DTs are applied in education to enable individualized instruction, track performance, and simulate learning processes under varying conditions [3–5]. Advances like virtual reality and generative AI-powered digital twins show promise for boosting motivation and adaptive learning [4]. The Community of Inquiry (CoI) framework provides a complementary lens for understanding how teaching presence and social presence shape these learning processes in online environments [6]. Despite advances in DTs, little work has systematically explored how DT environments can model the dynamic interplay between SRL processes, instructional feedback, peer interaction, and task complexity. Understanding this is especially important in large-scale online programs where learners face high variability in task demands and available support.

The contribution of this study is the development of a novel agent-based simulation digital twin of self-regulated learners to examine how combinations of teaching and social presence, operationalized as instructor and peer feedback, interact with task complexity to shape learner performance. Our model simulates learners progressing through the SRL cycle, enabling controlled exploration of how feedback and complexity influence self-regulatory behaviors in open-access, self-paced MOOC contexts.

This paper is structured as follows. Section II describes the background, Section III details the simulation model, and Section IV presents the findings. Section V concludes the paper.

II. BACKGROUND

We aim to examine how teaching and social presence moderate learners’ progression through the SRL cycle and how task complexity shapes learners’ transitions across SRL phases. By modeling these dynamic interactions, the study contributes to the design of adaptive, data-informed systems that simulate and support students’ learning processes.

A. Self-Regulated Learning and Co-Regulation

Winne and Hadwin’s SRL model conceptualizes learning as a cyclical, loosely sequenced process consisting of four key phases [7]. In Phase 1 (Task Definition), learners construct an understanding of the task’s requirements and learning context. Phase 2 (Goal Setting and Planning) involves setting specific learning goals and selecting strategies or tactics to achieve them. Phase 3 (Enactment of Study Tactics) is the implementation of those cognitive strategies (e.g., summarizing, note-taking, elaborating) while continuously monitoring one’s performance. Finally, Phase 4 (Adaptation) entails reflecting on outcomes by comparing performance against standards or goals and making adjustments to one’s approaches for future tasks [7]. Throughout this cycle, metacognitive monitoring and feedback play a central role: learners generate internal feedback by evaluating learning products against standards, which then informs strategy adjustments [7].

Building on this model, SRL has been extended to include co-regulation, the process through which learners support each other’s regulatory behaviors via socially shared interactions [8]. Co-regulation acts as a scaffold that helps learners internalize self-regulatory skills through feedback, modeling, or prompting from peers, instructors, or digital tools. In collaborative learning contexts, learners often co-construct goals, monitor progress together, and engage in shared reflection and adaptation.

B. CoI Framework

The socially mediated view of SRL aligns closely with the CoI framework, which posits that meaningful online learning arises from the dynamic interaction of three core elements [9]. Teaching presence refers to the design, facilitation, and direction of learning activities that foster engagement and knowledge construction. Social presence captures learners’ ability to present themselves authentically, engage in open communication, and develop interpersonal relationships. Finally, cognitive presence reflects learners’ capacity to construct and confirm meaning through sustained reflection and dialogue [6]. Recent research emphasizes that teaching and social presence support collaborative learning and shape both self-regulated and co-regulated learning behaviors in online environments. Teaching presence provides structured guidance that supports goal setting and adaptation, while social presence fosters peer feedback, motivation, and the co-construction of learning strategies [8].

C. DT in Education

DTs offer dynamic representations of learners and their interactions with learning environments, making them promising tools for modeling and supporting SRL [3–5]. For example, DT-based hybrid learning models have improved the prediction of learning outcomes and enabled timely interventions [3], while virtual reality-integrated DT systems enhance learner motivation and reduce cognitive load by synchronizing physical and virtual practice. In addition, generative AI-powered DTs provide scalable, adaptive learning environments aligned with learners’ cognitive stages and developmental needs [4], and digital student profiles constructed through DTs offer actionable insights for instructors and institutions, enabling personalized learning trajectories and real-time support [5]. These developments suggest that DTs can serve as experimental platforms for modeling and refining SRL strategies by embedding students in interactive simulations that reflect and adapt to their evolving understanding and behaviors. To examine dynamic interactions, we leverage an agent-based model (ABM) [10], which enables controlled simulation of learners progressing through SRL cycles while interacting with varying task demands, peer influences, and instructional feedback. In this framework, individual agents represent learners with dynamic attributes and behaviors, enabling us to model how SRL processes unfold through interactions with peers, instructors, and varying task demands. We examine the following objectives:

- Research Objective 1: Analyze the impact of social presence (via peer feedback) on learners’ performance.

- Research Objective 2: Investigate how varying task complexity shapes learners’ transitions across SRL phases and the overall performance.

III. METHODOLOGY

To investigate the dynamics of student learning behavior in complex and interactive environments, we developed an agent-based simulation model using NetLogo, a widely used platform for simulating natural and social phenomena [11, 12]. NetLogo provides a simulation environment for modeling individual agents (in our case, students) and their interactions within a specified spatial and behavioral framework [13]. In our model, each student is represented as a turtle (NetLogo’s term for an individual agent that can move), situated within a two-dimensional grid of patches that represents the MOOC learning environment. Each student agent models cognitive and metacognitive processes observed in human learners, such as task understanding, self-efficacy, effort allocation, and strategy adaptation. Figure 1 presents the initial setup of the simulation interface, depicting students within a MOOC environment. On the left-hand side of the interface, several interactive controls adjust key parameters that influence student behavior and learning outcomes. These include:

- Task Complexity: A slider that sets the overall difficulty of learning tasks across the MOOC environment. Internally, it is linked to the challenge-factor variable, which determines the challenge intensity each patch presents to student agents. Students move through the environment, encounter challenges, and adjust their academic performance based on internal and external factors. The task-complexity-slider ranges from 0 (no challenge) to 1 (highest level of challenge).

- Number of Learners: A slider for setting the total number of student agents in the MOOC environment. This enables the exploration of group size effects and the scalability of peer influence.

- Peer Feedback (On/Off): A boolean switch that enables or disables the role of peer interaction. When activated, the model allows agents to compute peer-influence based on the average performance of nearby agents within a given radius. This influences the agents’ effort adjustment, metacognitive adaptation, and the overall performance.

- Instructor Feedback (On/Off): A switch to control whether students receive feedback from an external source (i.e., instructor). This can be modeled by periodically boosting the performance or metacognitive awareness of selected agents. If enabled, instructor interventions can support students in recovering from low performance.

- Goal-Setting Percentage: A slider that specifies the proportion of student agents who are actively engaged in goal-setting. These agents may demonstrate higher baseline effort or metacognitive awareness, simulating the impact of intentional goal-directed behavior on learning outcomes.
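When peer feedback is on, each agent's peer influence is the average performance of nearby agents within a given radius. The Python sketch below illustrates that calculation outside NetLogo, where the equivalent agentset would come from `other turtles in-radius`. The `Agent` class, the `peer_influence` helper, the Euclidean distance on the patch grid, the default radius of 2, and the fallback to the agent's own performance when no peer is in range are all illustrative assumptions, not details of the published model.

```python
from dataclasses import dataclass
from math import hypot
from typing import Sequence


@dataclass
class Agent:
    """Hypothetical learner agent placed on the 2-D patch grid."""
    x: float
    y: float
    performance: float  # composite performance in [0, 1]


def peer_influence(agent: Agent, population: Sequence[Agent], radius: float = 2.0) -> float:
    """Mean performance of the *other* agents within `radius` (Euclidean distance).

    Assumption (hypothetical): with no peers in range, the agent's own performance
    is returned, so peer feedback leaves it unchanged.
    """
    neighbours = [
        other.performance
        for other in population
        if other is not agent and hypot(other.x - agent.x, other.y - agent.y) <= radius
    ]
    if not neighbours:
        return agent.performance
    return sum(neighbours) / len(neighbours)


learners = [Agent(0, 0, 0.4), Agent(1, 0, 0.5), Agent(0, 1, 0.75), Agent(5, 5, 0.1)]
print(peer_influence(learners[0], learners))  # → 0.625 (mean of 0.5 and 0.75)
```

Excluding the agent itself mirrors the use of `other` in NetLogo, so a learner's own score never counts as peer feedback.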

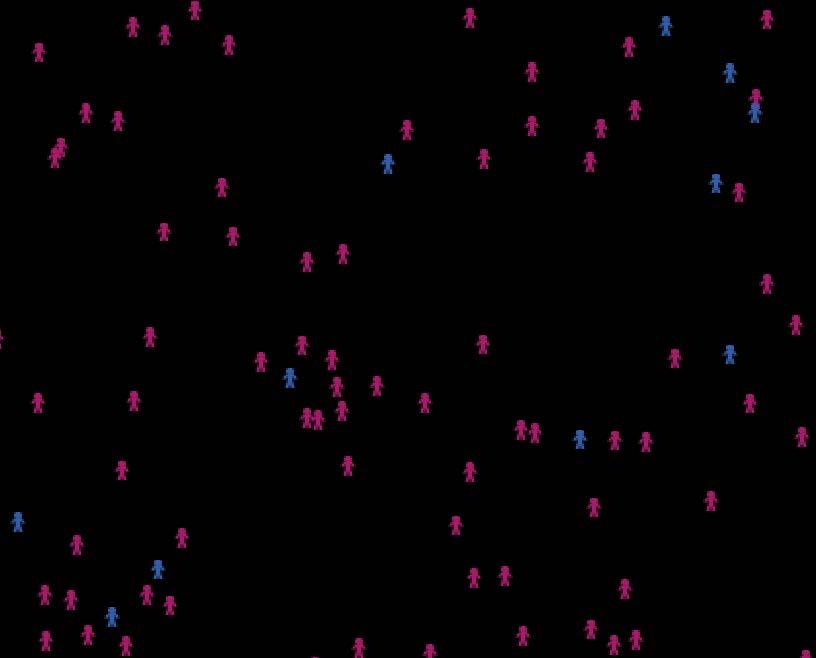

Each of these parameters can be adjusted dynamically, allowing the model to simulate and analyze various learning scenarios within MOOC-like environments. The model is further extended to simulate learners’ decision-making and phase transitions across the SRL cycle. The simulation incorporates four primary parameters; the first represents the four stages of SRL: Task Definition, Goal Setting, Enacting Tactics, and Adaptation. Agent colors change dynamically to reflect each learner’s current phase within the SRL cycle (see Figure 2). Each SRL phase is visually distinguished by a unique color to aid interpretation and tracking of learner progression:

- Phase 0 – Task Definition: Green

- Phase 1 – Goal Setting & Planning: Orange

- Phase 2 – Enacting Tactics: Magenta

- Phase 3 – Adaptation: Blue

For example, when a learner shifts from defining the task to setting goals, their color transitions from green to orange. The agent behaviors, phase transitions, and color changes are updated at each simulation tick. One tick represents a single day, reflecting the daily rhythm of SRL, peer interaction, and instructional feedback in a typical MOOC environment.
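The phase numbering and color coding amount to a small lookup table plus a successor rule. The following Python sketch is only an illustration (the model itself is written in NetLogo): `SRLPhase`, `PHASE_COLOR`, and `next_phase` are hypothetical names, and the `skip_goal_setting` flag mirrors the Phase 0 to Phase 2 shortcut that Algorithm 1 describes for confident learners. When a learner actually advances during a tick is not modeled here.

```python
from enum import IntEnum


class SRLPhase(IntEnum):
    """SRL phases, numbered 0-3 as in the simulation (hypothetical Python names)."""
    TASK_DEFINITION = 0
    GOAL_SETTING = 1
    ENACTING_TACTICS = 2
    ADAPTATION = 3


# Display color for each phase, mirroring the agent colors in the NetLogo interface.
PHASE_COLOR = {
    SRLPhase.TASK_DEFINITION: "green",
    SRLPhase.GOAL_SETTING: "orange",
    SRLPhase.ENACTING_TACTICS: "magenta",
    SRLPhase.ADAPTATION: "blue",
}


def next_phase(phase: SRLPhase, skip_goal_setting: bool = False) -> SRLPhase:
    """Return the phase after `phase`; Adaptation wraps around to Task Definition."""
    if phase is SRLPhase.TASK_DEFINITION and skip_goal_setting:
        return SRLPhase.ENACTING_TACTICS  # confident learners bypass goal setting
    return SRLPhase((phase + 1) % len(SRLPhase))


print(PHASE_COLOR[next_phase(SRLPhase.TASK_DEFINITION)])  # → orange
```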

Fig. 1. Initial setup of the simulation interface depicting students within a MOOC environment.

Additionally, each learner agent possesses a set of internal attributes that model its cognitive and self-regulatory capacities: task understanding (prior knowledge and perceived task clarity), self-efficacy (belief in one’s ability to succeed), and self-regulatory skills (metacognitive awareness, effort allocation, metacognitive monitoring, and metacognitive evaluation).
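These attributes can be pictured as a per-agent record, with the Goal-Setting Percentage slider deciding which agents start as goal-setters. In this Python sketch, the `LearnerState` and `init_population` names, the uniform initial draws from [0.2, 0.6], and the +0.1 baseline boost to effort and metacognitive awareness for goal-setters are hypothetical values chosen for illustration; the abridged paper does not report the model's initial distributions.

```python
import random
from dataclasses import dataclass


@dataclass
class LearnerState:
    """Hypothetical per-agent record; numeric attributes lie in [0, 1]."""
    prior_knowledge: float          # task understanding
    task_clarity: float             # task understanding
    self_efficacy: float
    metacognitive_awareness: float  # self-regulatory skills (this and the next three)
    effort: float
    monitoring: float
    evaluation: float
    goal_setter: bool = False


def init_population(n: int, goal_setting_pct: float, seed: int = 0) -> list[LearnerState]:
    """Create n learners, round(n * goal_setting_pct) of whom are goal-setters.

    Assumed (hypothetical) values: attributes start uniformly in [0.2, 0.6];
    goal-setters get +0.1 effort and +0.1 metacognitive awareness, capped at 1.0.
    """
    rng = random.Random(seed)
    n_goal = round(n * goal_setting_pct)

    def draw() -> float:
        return rng.uniform(0.2, 0.6)

    learners = []
    for i in range(n):
        learner = LearnerState(
            prior_knowledge=draw(), task_clarity=draw(), self_efficacy=draw(),
            metacognitive_awareness=draw(), effort=draw(), monitoring=draw(),
            evaluation=draw(), goal_setter=i < n_goal,
        )
        if learner.goal_setter:
            learner.effort = min(1.0, learner.effort + 0.1)
            learner.metacognitive_awareness = min(1.0, learner.metacognitive_awareness + 0.1)
        learners.append(learner)
    rng.shuffle(learners)  # avoid clustering goal-setters at the front of the list
    return learners


cohort = init_population(50, goal_setting_pct=0.4)
```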

Algorithm 1 outlines the steps used to construct the simulation, following the SRL cycle while incorporating both external factors and learners’ internal attributes.

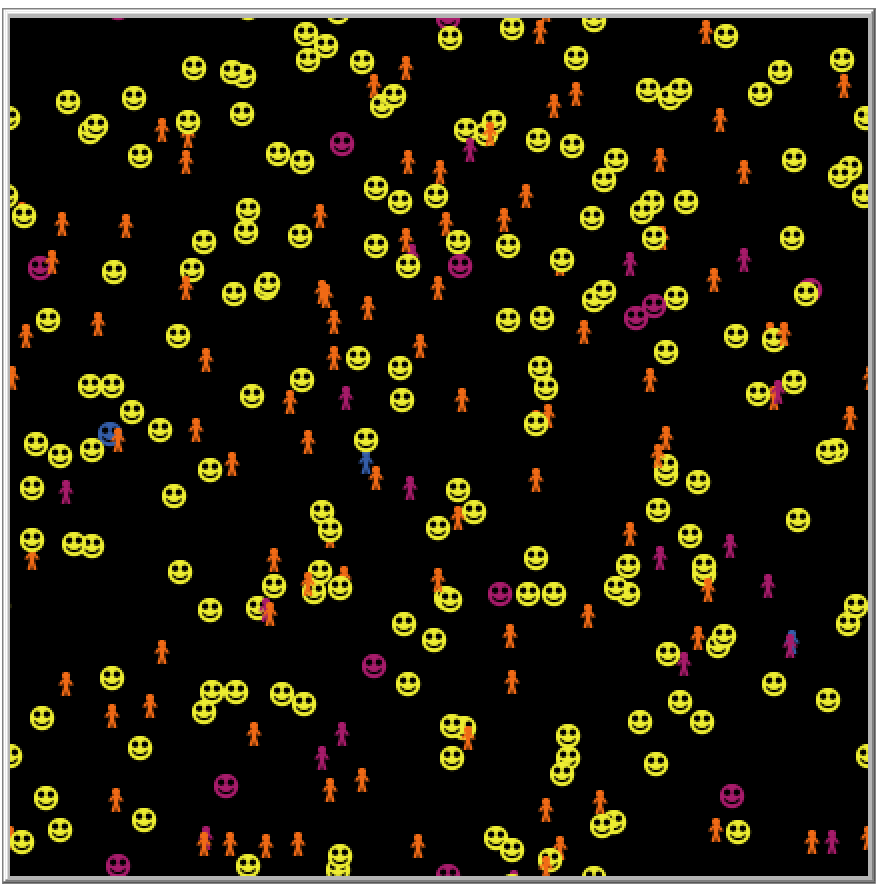

- In Phase 0 (Task Definition), learners assess their understanding of the task. If they have high self-efficacy and clarity, they may skip goal setting. If a learner skips the goal-setting phase, the simulation interface updates their visual representation from a person to a smiley face, indicating confidence or perceived readiness to proceed.

- In Phase 1 (Goal Setting), learners plan their approach. If instructor feedback is enabled, their self-efficacy increases, especially on harder tasks. Learners who performed well in the previous cycle may also gain confidence. Metacognitive awareness increases slightly during this phase.

- In Phase 2 (Enacting Tactics), learners apply strategies and adjust their effort. If peer feedback is available, it gives a small boost to effort and awareness. Effort also increases based on how much capacity the learner has to improve (diminishing returns). Monitoring and awareness continue to grow, and performance is computed as a product of effort, self-efficacy, and monitoring, adjusted for task difficulty.

- In Phase 3 (Adaptation), learners reflect. If their average effort and monitoring are low, they adapt by increasing their self-efficacy and awareness. After this, they start the next SRL cycle.
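The four phase rules above can be sketched as a single per-tick update for one learner. This Python version follows the qualitative rules of Algorithm 1, but every numeric constant, the `(1 - complexity)` difficulty adjustment in the performance product, the 0.8 thresholds for skipping goal setting, and the complexity-dependent probability of leaving a phase are assumptions made for illustration, since the abridged paper does not specify them. `Learner` and `srl_step` are hypothetical names.

```python
import random
from dataclasses import dataclass


def clamp(value: float) -> float:
    """Keep an attribute inside [0, 1]."""
    return max(0.0, min(1.0, value))


@dataclass
class Learner:
    phase: int = 0           # 0 Task Definition, 1 Goal Setting, 2 Enacting Tactics, 3 Adaptation
    task_clarity: float = 0.5
    self_efficacy: float = 0.5
    awareness: float = 0.5   # metacognitive awareness
    effort: float = 0.5
    monitoring: float = 0.5  # metacognitive monitoring
    performance: float = 0.0
    skipped_goal_setting: bool = False


def srl_step(lr: Learner, complexity: float, instructor: bool, peer: bool,
             peer_signal: float, rng: random.Random) -> None:
    """Update one learner for one tick (one day). All constants are illustrative assumptions."""
    # Assumption: harder tasks lower the chance of leaving the current phase this tick.
    advance = rng.random() < 1.0 - 0.8 * complexity

    if lr.phase == 0:  # Task Definition
        lr.skipped_goal_setting = lr.self_efficacy > 0.8 and lr.task_clarity > 0.8
        if advance:
            lr.phase = 2 if lr.skipped_goal_setting else 1
    elif lr.phase == 1:  # Goal Setting & Planning
        if instructor:  # teaching presence: larger self-efficacy boost on harder tasks
            lr.self_efficacy = clamp(lr.self_efficacy + 0.02 * (1.0 + complexity))
        if lr.performance > 0.5:  # performed well in the previous cycle
            lr.self_efficacy = clamp(lr.self_efficacy + 0.01)
        lr.awareness = clamp(lr.awareness + 0.01)
        if advance:
            lr.phase = 2
    elif lr.phase == 2:  # Enacting Tactics
        if peer:  # social presence: small boost scaled by neighbours' mean performance
            lr.effort = clamp(lr.effort + 0.02 * peer_signal)
            lr.awareness = clamp(lr.awareness + 0.02 * peer_signal)
        lr.effort = clamp(lr.effort + 0.1 * (1.0 - lr.effort))  # diminishing returns
        lr.monitoring = clamp(lr.monitoring + 0.02)
        lr.awareness = clamp(lr.awareness + 0.01)
        # Assumed difficulty adjustment: scale the product by (1 - complexity).
        lr.performance = clamp(lr.effort * lr.self_efficacy * lr.monitoring * (1.0 - complexity))
        if advance:
            lr.phase = 3
    else:  # Adaptation
        if (lr.effort + lr.monitoring) / 2 < 0.5:  # low effort and monitoring: adapt
            lr.self_efficacy = clamp(lr.self_efficacy + 0.05)
            lr.awareness = clamp(lr.awareness + 0.05)
        if advance:
            lr.phase = 0  # begin the next SRL cycle


rng = random.Random(42)
learner = Learner()
for _ in range(4):
    srl_step(learner, complexity=0.0, instructor=True, peer=True, peer_signal=0.6, rng=rng)
print(learner.phase)  # → 0
```

With `complexity=0.0` the advance test always succeeds, so the learner visits Goal Setting, Enacting Tactics, and Adaptation once and returns to Task Definition after four ticks. Raising the complexity both lowers the transition probability and shrinks performance toward zero, which is one simple way to reproduce the qualitative pattern reported in Section IV.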

Fig. 2. Simulation interface at the end of the simulation, showing that the majority of students reached Phase 2 while a few reached Phase 3.

IV. RESULTS

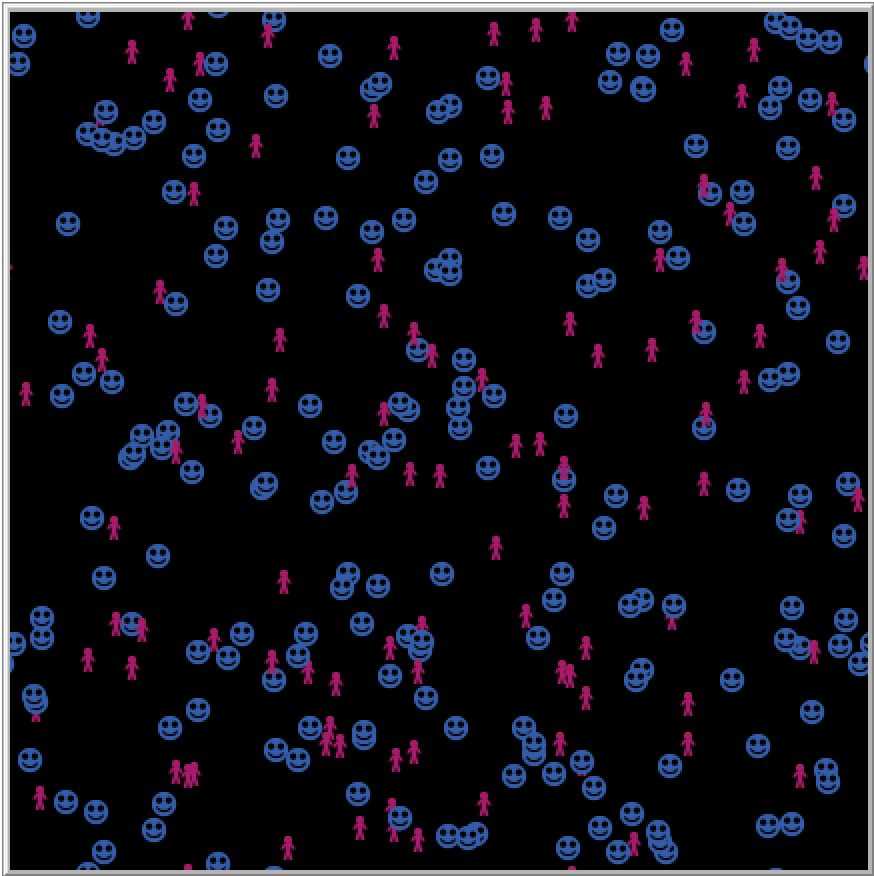

This section reports learner performance, computed at each time step as a composite index of effort allocation, self-efficacy, and metacognitive monitoring. The index is bounded within the [0, 1] range and represents a latent measure of effective engagement with the learning task. Figure 3 highlights, with a smiley-face icon, the agents that bypass the goal-setting phase (transitioning directly from Phase 0 to Phase 2) because of elevated self-efficacy and clear task perception. This behavior was observed after 10 ticks (interpreted as days) both when task complexity was set to 0 (no challenge) and when it was set to 1 (highest challenge), with instructor and peer feedback enabled. This suggests that early in learning (the first 10 days), task complexity, whether high or low, has little influence on phase transitions. Most other learners remained in either Phase 1 or Phase 2, and only three learners had reached Phase 3 (Adaptation). After the simulation continued for 50 ticks with a task complexity of 0, as shown in Figure 4, the majority of learners had progressed to either the Enacting Tactics or the Adaptation phase, demonstrating overall advancement through the SRL cycle.

Fig. 3. Phase transitions after 10 ticks with task complexity set to 0 and to 1, with peer and instructor feedback enabled.

Fig. 4. Phase transitions after 100 ticks with task complexity set to 0 and with peer and instructor feedback enabled.

When testing the effects of task complexity, we compared two scenarios: one with minimal task complexity (set to 0; see Figure 4) and another with high task complexity (set to 0.9 or 1.0). In both cases, the simulation was run for 100 ticks. Under low task complexity, learners progressed through the SRL phases more rapidly, and the majority reached the final phase, Adaptation, within the allotted simulation time.

In contrast, when task complexity was high, most learners remained in the third phase (Enacting Tactics), and several were still in the Goal Setting phase. This suggests that higher task complexity imposes cognitive and metacognitive challenges that may slow learners’ progression through the SRL cycle. It also highlights the importance of balancing challenge with support, as complex problems may hinder learners’ ability to complete the full cycle of self-regulation within a short time span.

When task complexity is set between 0.9 and 1, learners’ performance drops to minimal levels, often approaching zero. These results suggest that highly complex tasks demand substantial cognitive input before SRL strategies can be applied effectively. Despite the mechanisms for goal setting, effort allocation, and metacognitive adaptation, and despite support from peers and the instructor, the overwhelming nature of highly complex tasks appears to suppress performance gains. These results underscore the critical role of appropriately calibrated task difficulty in supporting effective learning progression.

During our simulation runs, we observed that learners who completed the goal-setting phase consistently performed better than those who skipped it, except when instructor feedback was disabled; in that condition, the performance gap between goal-setters and non-goal-setters diminished or disappeared. Performance decreases as task complexity increases, consistent with cognitive load theory and common expectations in learning systems. This decline is most pronounced when no feedback is provided. When both peer and instructor feedback are available, learners achieve the highest overall performance, particularly at low to moderate levels of task complexity. These findings suggest that targeted feedback strategies are essential for maintaining learner engagement and performance in challenging learning environments. The level of task complexity is also critical in creating a supportive learning environment, ensuring that students are challenged in ways that promote growth rather than causing them to disengage or quit due to overwhelming demands they are not prepared to handle.

To compare total learning effectiveness across conditions, we integrated performance over the task-complexity range by computing the area under the curve (AUC). The AUC results confirm our earlier analysis: the presence of both peer and instructor feedback supports learners’ overall performance.
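Integrating performance over the complexity range can be done with the trapezoidal rule on the sampled complexity levels. The Python sketch below assumes performance was recorded at a grid of complexity values for each feedback condition; `trapezoid_auc` is a hypothetical helper, and the linear curve in the usage lines is a made-up placeholder, not simulation output.

```python
from typing import Sequence


def trapezoid_auc(x: Sequence[float], y: Sequence[float]) -> float:
    """Area under the piecewise-linear curve through (x[i], y[i]) via the trapezoidal rule."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y need the same length and at least two points")
    return sum((x[i + 1] - x[i]) * (y[i] + y[i + 1]) / 2 for i in range(len(x) - 1))


# Placeholder curve for illustration only: performance falling linearly with complexity.
grid = [0.0, 0.25, 0.5, 0.75, 1.0]
placeholder = [1.0 - c for c in grid]
print(trapezoid_auc(grid, placeholder))  # → 0.5
```

Computing one AUC per feedback condition on the same complexity grid keeps the values directly comparable, because each one summarizes that condition's whole performance-versus-complexity curve in a single number.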

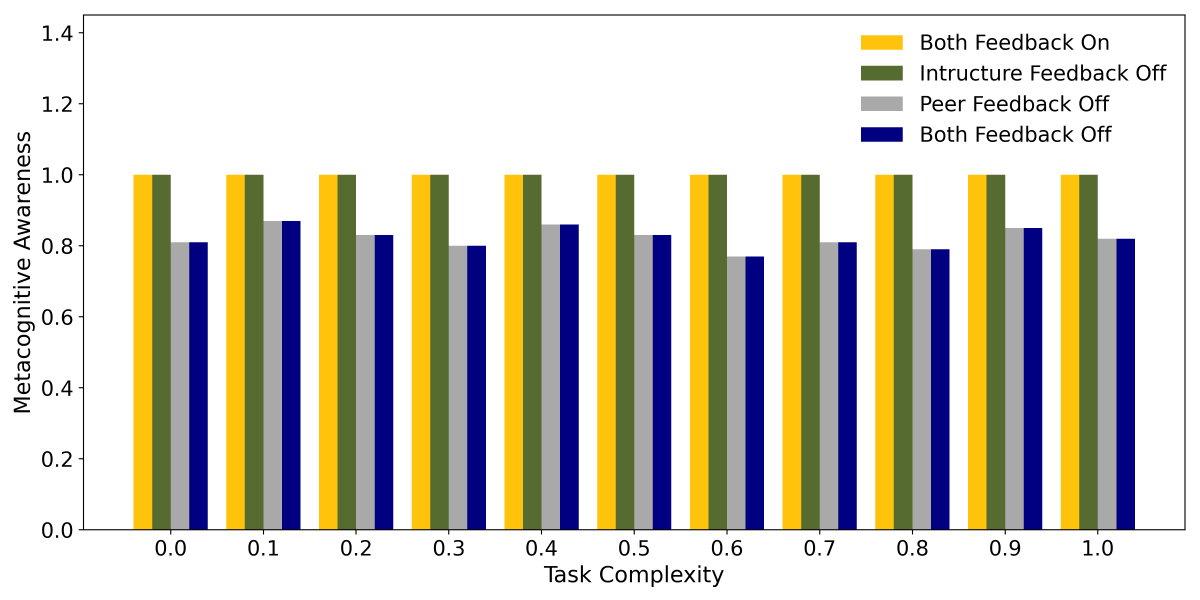

Figure 5 shows that peer feedback drives metacognitive awareness to its peak, regardless of instructor presence. Without peer feedback, metacognitive awareness stays in the 0.8–0.85 range, and instructor feedback alone does not raise it above that level. This suggests that social presence, modeled through peer interaction, plays a critical role in fostering learners’ reflective thinking and strategic engagement.

Fig. 5. Metacognitive awareness after 100 ticks under four conditions: (1) both feedback types enabled, (2) peer feedback enabled and instructor feedback disabled, (3) instructor feedback enabled and peer feedback disabled, and (4) both feedback types disabled.
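The four feedback conditions in Figure 5, crossed with the task-complexity levels, form a small factorial design; in NetLogo such sweeps are typically configured with BehaviorSpace. The Python sketch below only enumerates the runs. `RunConfig` and `feedback_design` are hypothetical names, and the condition order is chosen to match the numbering in the Figure 5 caption.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class RunConfig:
    """One hypothetical simulation run (field names are illustrative, not the model's globals)."""
    task_complexity: float
    peer_feedback: bool
    instructor_feedback: bool
    ticks: int = 100  # one tick represents one day


def feedback_design(complexities: list[float]) -> list[RunConfig]:
    """Cross each complexity level with the four (peer, instructor) feedback conditions.

    Order per level: both on, peer only, instructor only, both off (as in Fig. 5).
    """
    conditions = product([True, False], repeat=2)  # (peer, instructor) pairs
    return [RunConfig(c, peer, instructor) for c, (peer, instructor) in product(complexities, conditions)]


runs = feedback_design([0.0, 1.0])
print(len(runs))  # → 8
```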

V. CONCLUSION

This paper develops an agent-based simulation model that integrates the SRL framework with task complexity and the CoI constructs of teaching presence and social presence to better understand learners’ performance in a typical MOOC setting. By simulating learners’ progression through SRL phases in a DT environment, our model offers new insights into how instructional and social feedback interact with task demands to shape learning trajectories, interactions that have been underexplored in DT-based educational research. Our findings extend prior work on SRL and co-regulation [8] by illustrating that feedback mechanisms, particularly the combination of instructor and peer feedback, play a critical role in sustaining learners’ performance and metacognitive engagement under increasing task complexity. Goal setting emerged as a consistently beneficial self-regulatory strategy; however, its benefits were amplified when instructor feedback was present, underscoring the synergistic potential of teaching presence and self-regulatory planning. These findings highlight the importance of integrating goal-setting mechanisms and multimodal feedback into learning systems to promote adaptability and performance, particularly in self-paced MOOC settings. Finally, we observed the dominant role of peer feedback in activating metacognitive processes, emphasizing its importance in the design of collaborative SRL environments. This work contributes to a growing line of research exploring how DTs can serve not only as predictive models but also as experimental platforms for testing and refining adaptive learning settings. Future work will verify and validate the model through empirical studies.

(Please note that this is an abridged version of the paper. You may read and download the full version below.)